Understanding 10 types of false information

“Don’t believe everything you see online” is a mantra that many finger-wagging parents have repeated and to which many children – young and old – have rolled their eyes.

Unfortunately, we know this is a message everyone should heed. Misinformation is everywhere online – but not all misinformation is deliberate, or created equal.

We’ve broken down misinformation into 10 categories. Each carries a different level of threat, influence and intent. While misinformation is ubiquitous, it is easier to deal with once you can identify which types are malicious in nature and pose a high risk of causing panic and confusion.

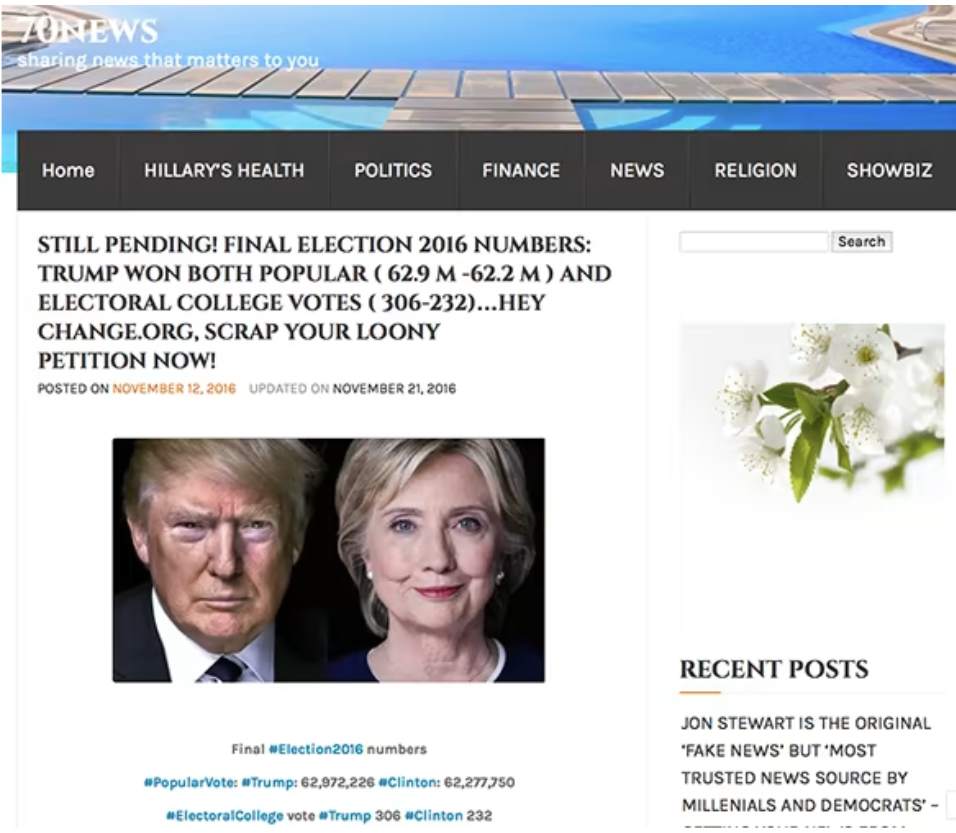

1. FAKE NEWS

Risk rating: high

The deliberate publishing of untrue information, often disguised as news. It is created purely to misinform audiences; the intention is never to report genuine facts.

Source: Financial Times

Source: Facebook Train2Go

This was debunked by AP News: AP News Fact Check

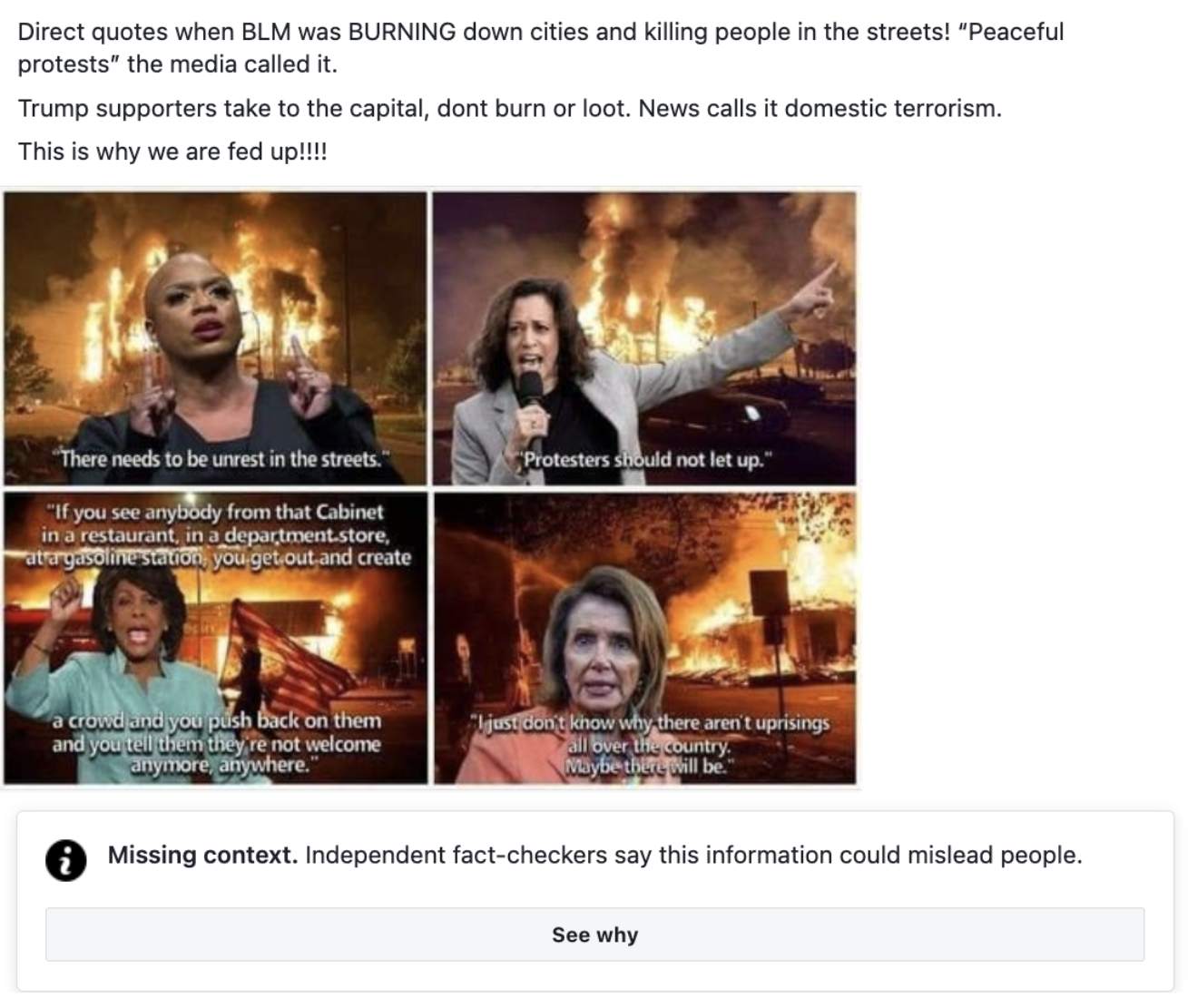

2. MANIPULATION

Risk rating: high

The deliberate altering of content to change its meaning. Includes reporting quotes out of context, along with cropping images or video to misrepresent the true story.

Source: USA Today

3. DEEPFAKES & AI CONTENT

Risk rating: high

The use of digital technology to replicate the live facial movements and voice of another person in a video, or the use of artificial intelligence to generate an image or video. AI images, video, and audio have boomed in popularity thanks to advancements in free software.

Source: The Guardian

4. SOCKPUPPET OR BURNER ACCOUNTS

Risk rating: high

Sockpuppet accounts are fake accounts created by real people to stir up conflict between two (or more) parties. For example, someone might deliberately create two fake events, each pitched at supporters of opposing political parties, to be held at the same place, time and day. This is most common with controversial figures, who may use burner accounts to substantiate arguments or mobilize followers in specific ways.

Source: Jacob Rubashkin, Twitter

5. PHISHING

Risk rating: high

Schemes aimed at unlawfully obtaining personal information from online users. Malicious web links are sent to users via text, email or other online messaging platforms, resembling a genuine message from a real person or company. The personal data entered after clicking through to the malicious web link is then harvested by cyber criminals.

Source: Bleeping Computer
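As a rough illustration of why a link can resemble a genuine company yet lead somewhere else, here is a minimal Python sketch that checks a link's real hostname against a list of trusted domains. The domain `example-bank.com`, the allowlist and both function names are hypothetical and chosen only for this example; this is a simplified check under those assumptions, not a complete phishing defense.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist, used only for illustration.
TRUSTED_DOMAINS = {"example-bank.com"}


def hostname_of(url: str) -> str:
    """Return the lower-cased hostname of a URL, or '' if it has none."""
    return (urlsplit(url).hostname or "").lower()


def is_trusted_link(url: str, trusted=TRUSTED_DOMAINS) -> bool:
    """True only if the host is a trusted domain or a subdomain of one."""
    host = hostname_of(url)
    return any(host == d or host.endswith("." + d) for d in trusted)


if __name__ == "__main__":
    links = [
        "https://example-bank.com/login",                     # genuine domain
        "https://example-bank.com.account-verify.net/login",  # trusted name used as a prefix
        "https://examp1e-bank.com/login",                     # look-alike spelling
    ]
    for link in links:
        print(link, "->", "trusted" if is_trusted_link(link) else "suspicious")
```

The second and third links look familiar at a glance, but the part of the address that decides where the browser actually goes does not belong to the trusted domain.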

6. MISINFORMATION

Risk rating: medium

Typically, a combination of accurate and incorrect content. Common examples of misinformation include misleading headlines, and using ill-informed and unverified sources to support a story.

Source: Business Insider

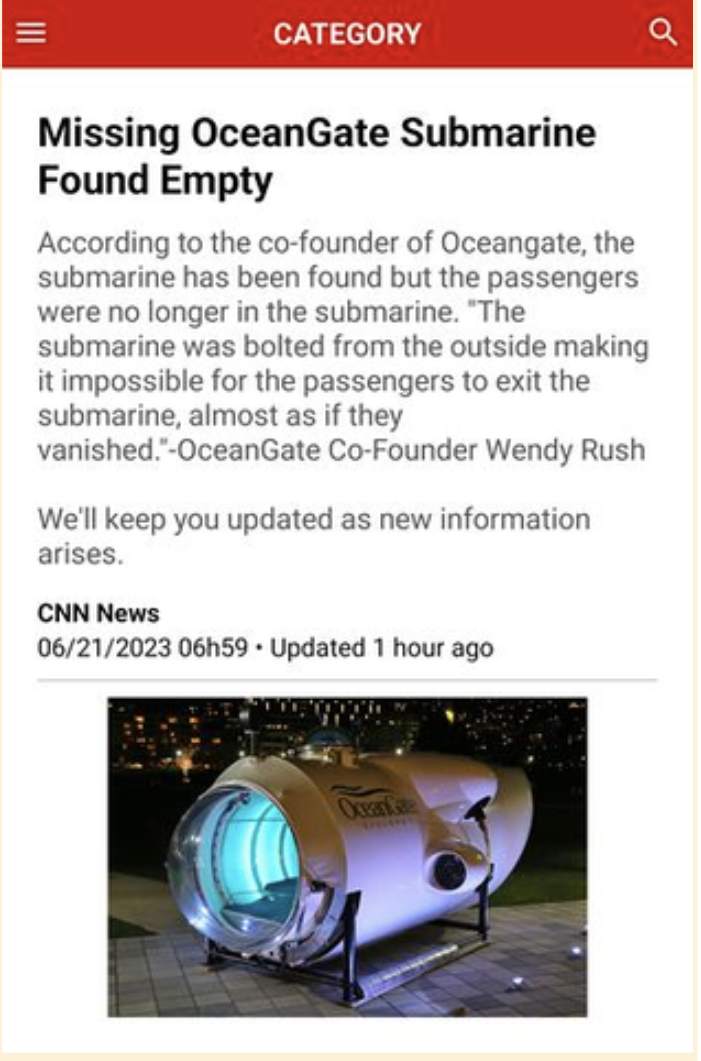

7. RUMOR

Risk rating: medium

Information shared without verification. Often occurs shortly after an incident (e.g. natural disaster or terrorist attack) when little information is known for certain.

Source: Know Your Meme

8. CLICK BAIT

Risk rating: medium

Sensationalized headlines designed to attract attention and readers. Each time the article is read, the author or owner of the advertisement receives a payment (also referred to as ‘pay-per-click’ advertising).

Source: Know Your Meme

9. SATIRE, PARODY & ‘SHITPOSTING’

Risk rating: low

Content created for comic and entertainment purposes. Examples include online profiles that mimic an official account or person, and articles featuring dark humor. Their primary intent is to appear funny, or to go viral and gain approval from other users, not to harass or create confusion.

Source: The Onion

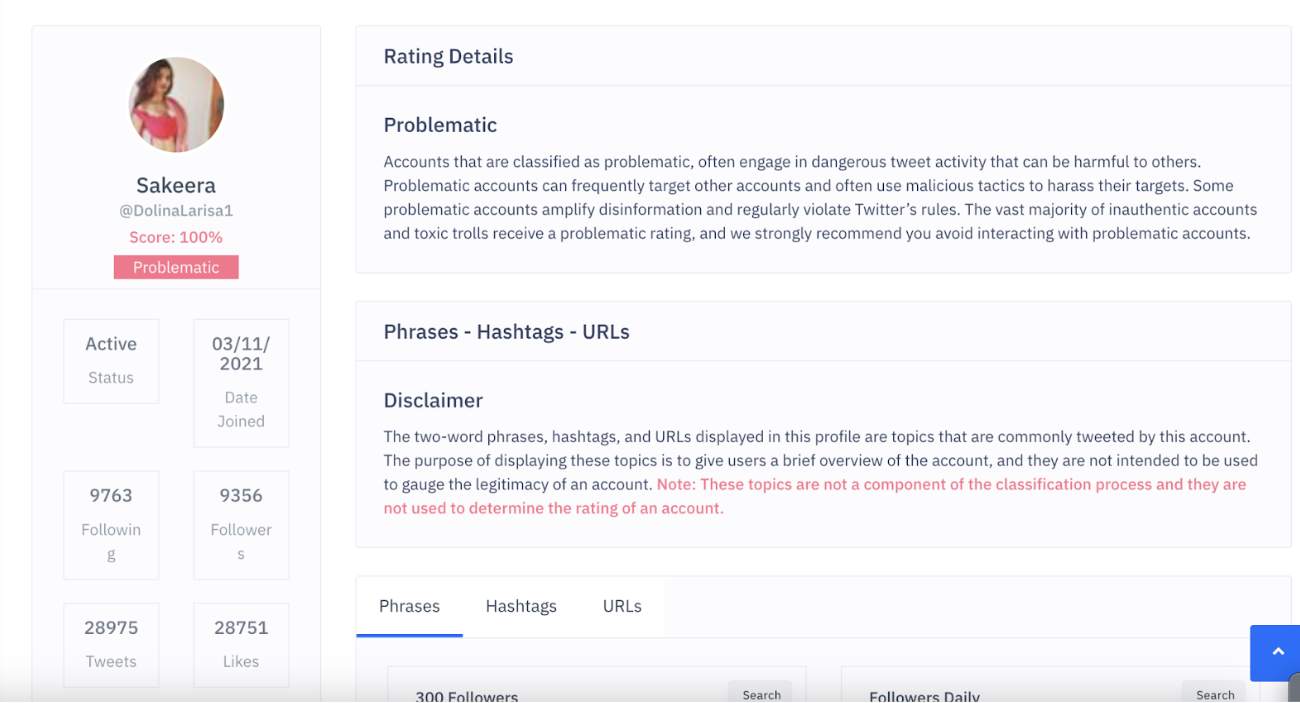

10. BOT

Risk rating: low

A bot is any automated social media account. Bots can be broken down into further categories.

Some real people use automated bots to alert them when a keyword is shared online. These are used for a range of purposes from advertising, to activism, to online harassment.

Some bots are harmless, like accounts that post cat pictures every hour.

The most malicious bots are those created to influence opinions (for example, Russian ‘bot’ interference in the 2016 US election). These bots are generated and run by automated technology systems (sometimes referred to as click farms or bot warehouses) and contribute to online discussions en masse. Social networks regularly remove profiles that do not appear to represent real users. However, because profile creation is automated, removals often cannot keep pace with the new bot profiles constantly being added to the network.
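The arithmetic behind that last point can be sketched in a few lines of Python. The function name and every number below are hypothetical, picked only to show the effect; they are not measurements from any real social network.

```python
def active_bots(days: int, created_per_day: int, removed_per_day: int, start: int = 0) -> int:
    """Count bot profiles left after `days`, never dropping below zero."""
    total = start
    for _ in range(days):
        # Each day new profiles are added and some are taken down.
        total = max(0, total + created_per_day - removed_per_day)
    return total


if __name__ == "__main__":
    # Hypothetical rates: creation outpaces removal by 200 profiles a day.
    print(active_bots(days=30, created_per_day=1000, removed_per_day=800))
    # Hypothetical rates: removal outpaces creation, so the backlog shrinks.
    print(active_bots(days=30, created_per_day=800, removed_per_day=1000, start=500))
```

As long as the daily creation figure is higher than the daily removal figure, the total keeps growing no matter how many profiles are taken down.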

Note that some accounts may look like bots but are in fact run by real people pursuing the same ends, much like sockpuppet accounts.

Source: BotSentinel

Source: Twitter

Download this free resource: How to differentiate between the different types of false information

Follow @socialsimulator and let us know what you think