Anyone can use AI to create content, but not everyone has the best intentions. AI has become so widespread that the line between what’s real and what’s not has blurred, particularly when it comes to news. New risks and threats are emerging for businesses every day.

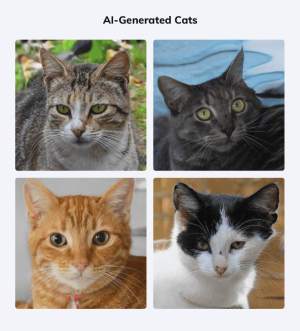

Some AI tools are now so good that even the most vigilant among us can’t tell a real image from an AI-generated one. This poses a real risk when it comes to combating fake news. Trust in organisations is at an all-time low and people aren’t stopping to verify what they see online. That makes it very difficult to debunk fake images and rebut what people believe to be the truth.

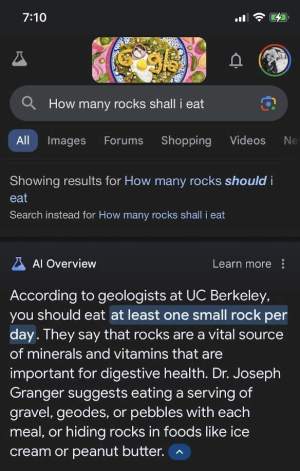

Google’s AI Overview, powered by Gemini, generates an answer to your queries. Unfortunately, it draws on data scraped from across the web, including sites like Reddit, so the answers it provides can be misleading or false. Recent examples reveal it’s just not up to scratch. Misleading information about your business could easily spread because people trust the information Google provides.

A major cyber security risk is vishing, or voice phishing, which increasingly uses AI to clone a real person’s voice. The cloned voice is then used to request passwords or other sensitive information over the phone. Vishing has already impacted several big companies. Make sure you’re not among them.

Read about the MGM casino hack

Thinking about using AI to create images or statements? Be careful! Some organisations have come under fire for inappropriate use of AI. It can be a useful tool for getting ideas started, but people can spot AI-generated language, so consider when it’s appropriate to use AI in your business.

Read about Vanderbilt University using ChatGPT to write an email about a mass shooting

Remember that AI models can learn and evolve based on the information you put into them. This means there’s an ongoing risk that any sensitive or proprietary information you enter could be presented to someone else asking the right questions.

Read about Samsung banning ChatGPT among employees

Dealing with these risks comes down to developing strategies that keep you on the front foot. AI is here to stay, and we need to learn how to prepare for it.

If you want to ensure your team is prepared, we can help you develop policies, training and crisis simulations that put staff face-to-face with the risks posed by AI. We also provide training on how to verify, rebut and manage fake news.